I know, I know ... more content about GPT4 // BrXnd Dispatch vol. 013.5

Coding with GPT4, running models locally, and HANDS!

You’re getting this email as a subscriber to the BrXnd Dispatch, a bi-weekly email at the intersection of brands and AI. On May 16th, we’re holding the BrXnd Marketing X AI Conference. Early bird tickets are 20% off until 4/1. If you’d like to learn more or sponsor the event, please be in touch.

Lots to talk about this Friday, let’s dive in. (Of course, if you missed Tuesday’s email, I released Ad-Venture, a text game set in the smoke-filled halls of a 1960s ad agency.)

The biggest news this week is surely the release of GPT4. At this point, I’m sure you’ve read/heard plenty about it, but I can’t help relaying an experience that seems instructive about what the new model is particularly good at.

One of the things I was dealing with this week was getting some instrumentation set up. I want to be able to keep track of a few things happening around the set of BrXnd sites I’m now managing, and to do that, I was trying to set up RudderStack, a customer data platform (CDP), with Next.js, the React framework I use for almost everything. Because of some quirks of Next.js, it wasn’t playing nice with RudderStack, despite RudderStack having documentation they claimed would work. I reached out to their support folks on Slack but couldn’t get anywhere, and I even hit up some Next.js folks on Discord out of desperation. I just couldn’t crack it.

So, feeling a bit fed up, I figured I’d ask GPT4 (via ChatGPT+). Worst case scenario, it wouldn’t work, and I’d learn something about GPT4. Best case scenario, it would solve the problem.

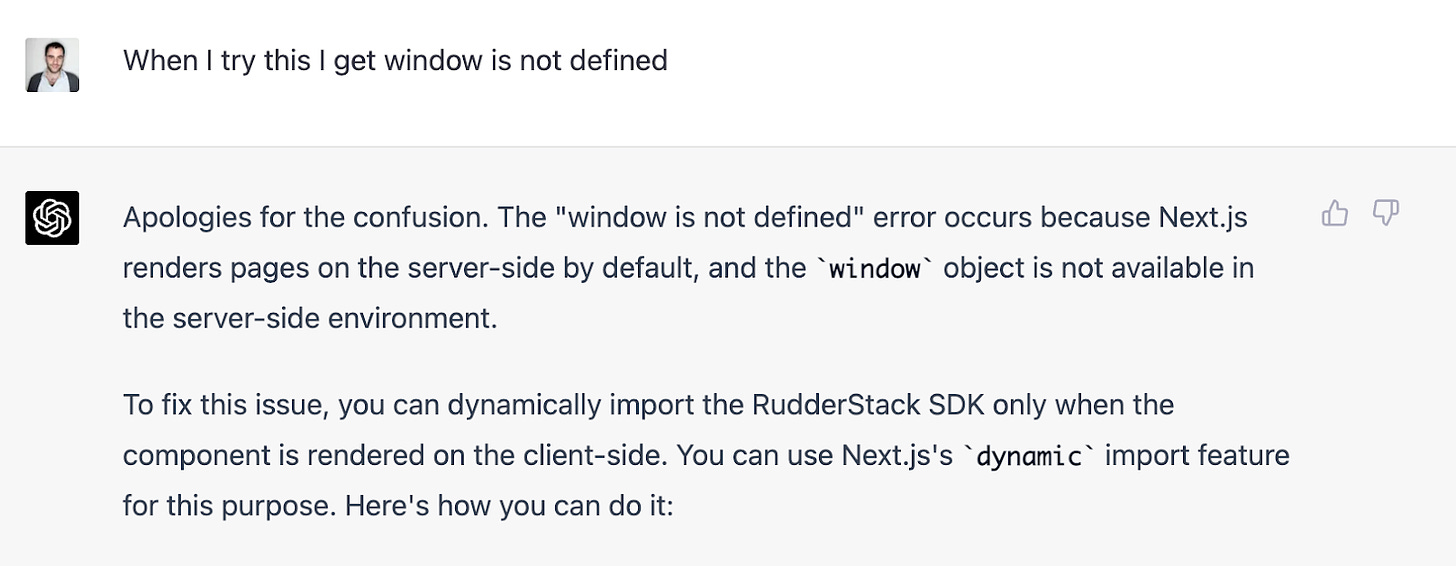

I started about as broad as possible by inputting, “I need to figure out how to use rudder-sdk-js with next.js.” Off the jump it was interesting because it gave me a fundamentally different approach than the RudderStack docs had provided. I had already spent the day Googling and found nothing resembling what GPT4 recommended. So I tried it, and … it didn’t work. Same error: “window is not defined.” So I told GPT4.

The start of its answer was fascinating, and something I saw repeated throughout the conversation: “Apologies for the confusion.” But then it went on to offer a different approach using Next.js’s dynamic import feature. This was interesting and made some sense to me, so I followed along. This time I got a different error. We were making progress!
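For readers who haven’t hit it: “window is not defined” typically appears because Next.js renders pages on the server, where there is no browser `window`, so a browser-only library fails as soon as it is loaded. Below is a minimal sketch of the general pattern of deferring the load until the code is running in a browser. It is not the code GPT4 produced and not the final fix. The `AnalyticsClient` interface, `isBrowser`, and `initAnalytics` are hypothetical names made up for illustration, standing in for whatever the real SDK exposes.

```typescript
// Hypothetical stand-in for the small part of an analytics SDK used here.
interface AnalyticsClient {
  load(writeKey: string, dataPlaneUrl: string): void;
  page(): void;
}

// True only where a global `window` exists (a browser, not a server render).
function isBrowser(): boolean {
  return "window" in globalThis;
}

// Load and start the SDK only in the browser; on the server, do nothing.
// `importSdk` is an assumption: it would typically wrap a dynamic import()
// of the real package, so nothing browser-only is evaluated on the server.
async function initAnalytics(
  importSdk: () => Promise<AnalyticsClient>,
  writeKey: string,
  dataPlaneUrl: string,
  inBrowser: boolean = isBrowser(),
): Promise<AnalyticsClient | null> {
  if (!inBrowser) return null; // server render: never touch the SDK
  const client = await importSdk(); // the import is deferred until this point
  client.load(writeKey, dataPlaneUrl);
  client.page(); // record the initial page view
  return client;
}
```

In a real app, a call like this would usually sit inside a React `useEffect`, which only runs in the browser. The exact exports and method names depend on the SDK and its version, so treat the interface above as a placeholder.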

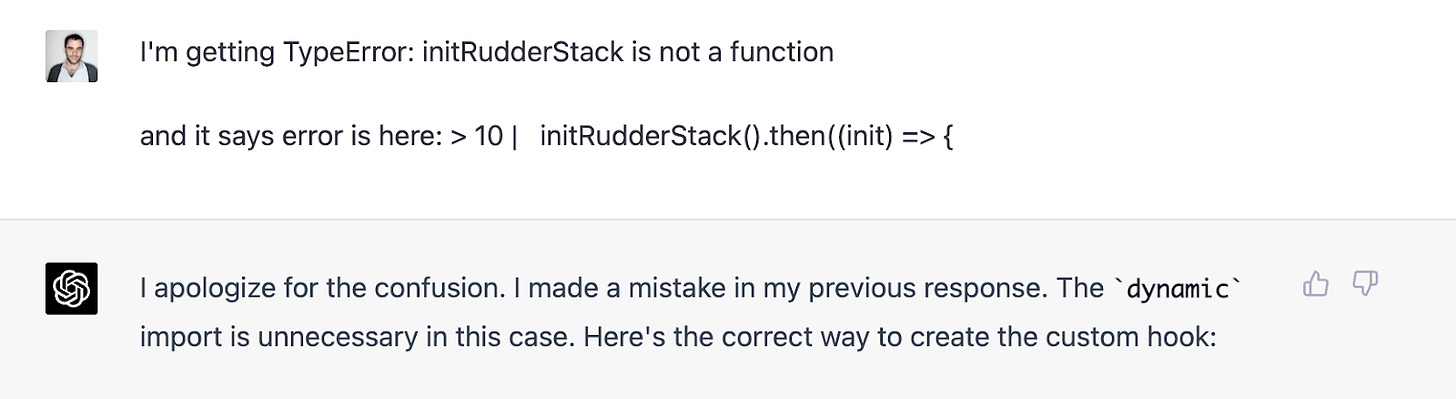

This time it acknowledged it had made a mistake. Clearly, this is part of its training, but it’s still interesting and gives a bit of a feeling of working through this problem like a human: trying things, getting feedback, and trying more things. Anyway, I copied its code again, and now I was back to my “window is not defined” error. But this time, it was happening for a different reason. We were moving forward. After two or three more back-and-forths, things seemed to be working. Amazing!

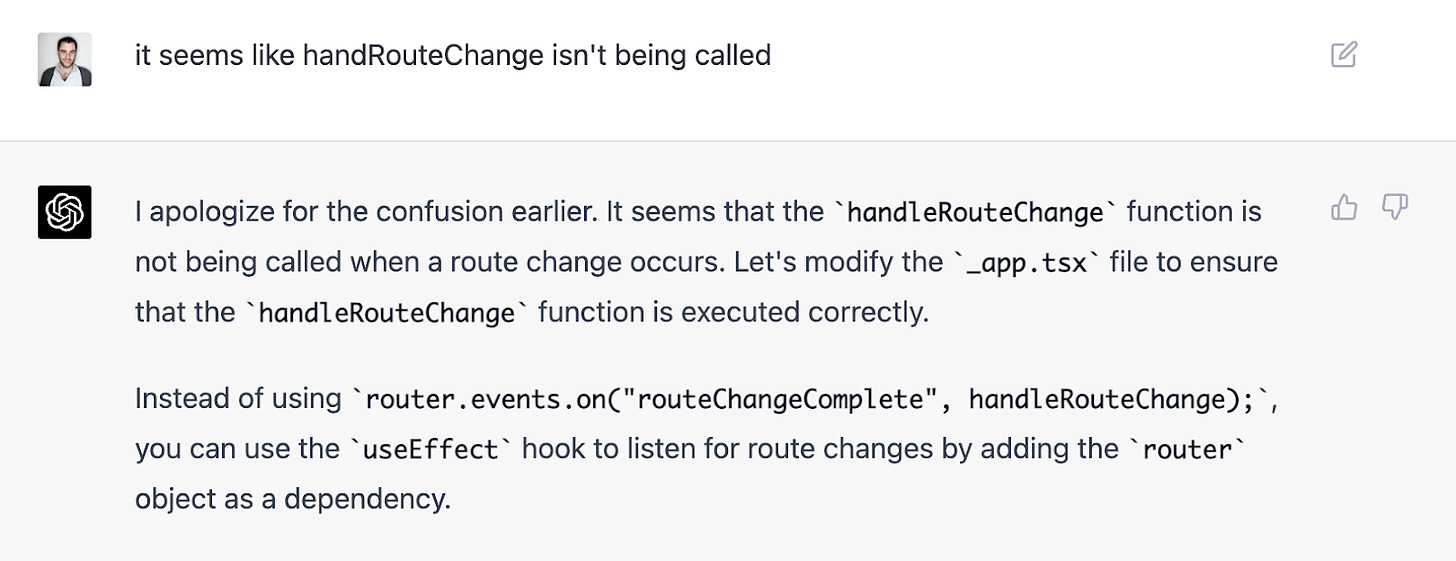

But they weren’t. RudderStack is supposed to make a call on load, and it wasn’t doing that. I wasn’t getting the same error, but it still wasn’t working. So without an error to relay, I just told GPT4 the situation.

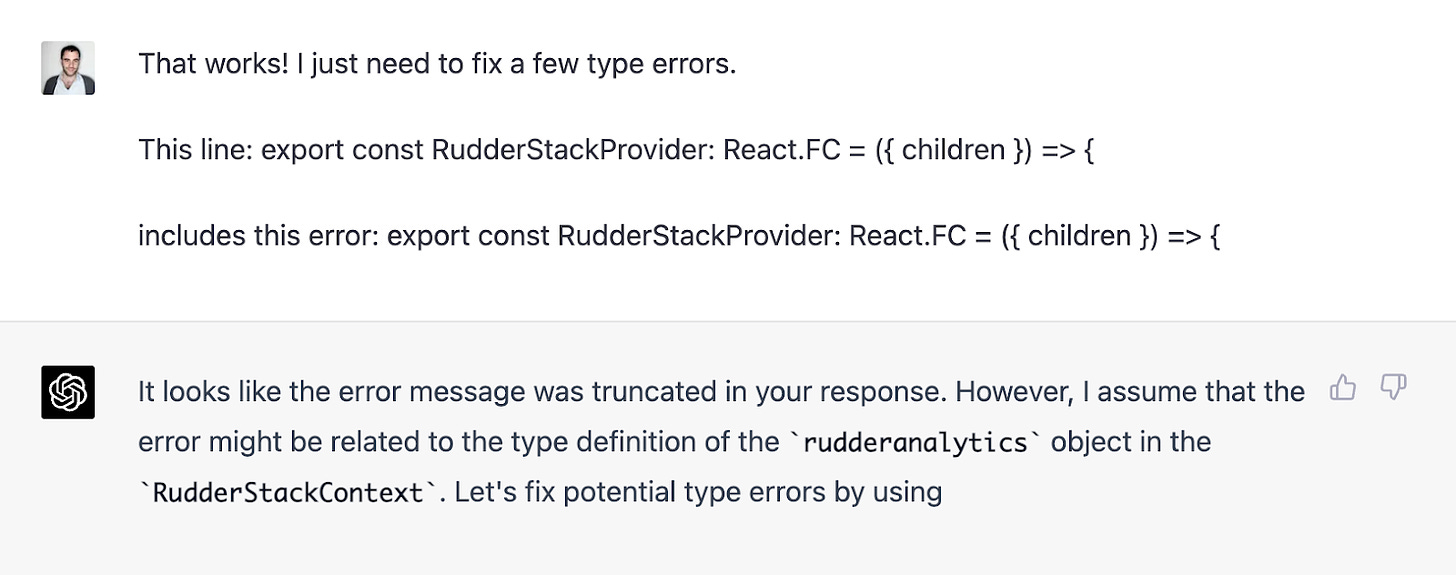

And so we continued like this—GPT4 offering me some code and me relaying what was happening. I was running into new and different problems, which honestly felt like progress. It really felt like a partnership: I needed to feed it some info about what the problem was so it could help me debug it. After a few rounds, it changed paths, and now we were really on the right track. After a few more back-and-forths, it was working!

It really did solve the whole thing. I checked the solution with a much more talented programmer than myself, and he was impressed.

So what do I take away from all this? A few things:

While the output is obviously better (getting clean code out of earlier versions wasn’t always easy), it feels like the model’s ability to understand inputs is the differentiator. I tried a bunch of other kinds of prompts today, and that seemed to be a consistent theme. Sure, its writing is a little cleaner and more interesting, but on first pass it really felt like what it was doing fundamentally differently from previous versions was interpreting my input better. I need to play more to have a better read on that, but it’s interesting to think about the implications for prompt engineering.

I was the middleman here—taking the code it gave me and relaying errors or system information. But it’s not hard to imagine a scenario where it’s just hooked directly up and does these rounds itself.
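To make that idea concrete, here is a rough sketch of what such a loop might look like with the human taken out of the middle. Everything in it is hypothetical: `suggest` stands in for a call to a model, `run` stands in for executing the code and capturing any error, and the toy versions at the bottom exist only so the sketch runs on its own.

```typescript
// One round of the loop: the code that was tried and the error it produced
// (null means it ran cleanly).
interface Attempt {
  code: string;
  error: string | null;
}

// Ask for code, run it, feed the result back, and repeat until it works
// or the round budget runs out.
function repairLoop(
  suggest: (history: readonly Attempt[]) => string, // hypothetical model call
  run: (code: string) => string | null,             // hypothetical code runner
  maxRounds: number,
): Attempt[] {
  const history: Attempt[] = [];
  for (let round = 0; round < maxRounds; round++) {
    const code = suggest(history); // the model sees every earlier attempt
    const error = run(code);
    history.push({ code, error });
    if (error === null) break; // success: stop early
  }
  return history;
}

// Toy stand-ins: the "model" just counts rounds, and the "runner" fails
// until the third version.
const fakeSuggest = (history: readonly Attempt[]): string =>
  `version ${history.length + 1}`;
const fakeRun = (code: string): string | null =>
  code === "version 3" ? null : "window is not defined";

const rounds = repairLoop(fakeSuggest, fakeRun, 5);
console.log(rounds.length); // 3
```

The round limit matters: without it, a loop like this could keep going indefinitely on a problem the model can’t solve.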

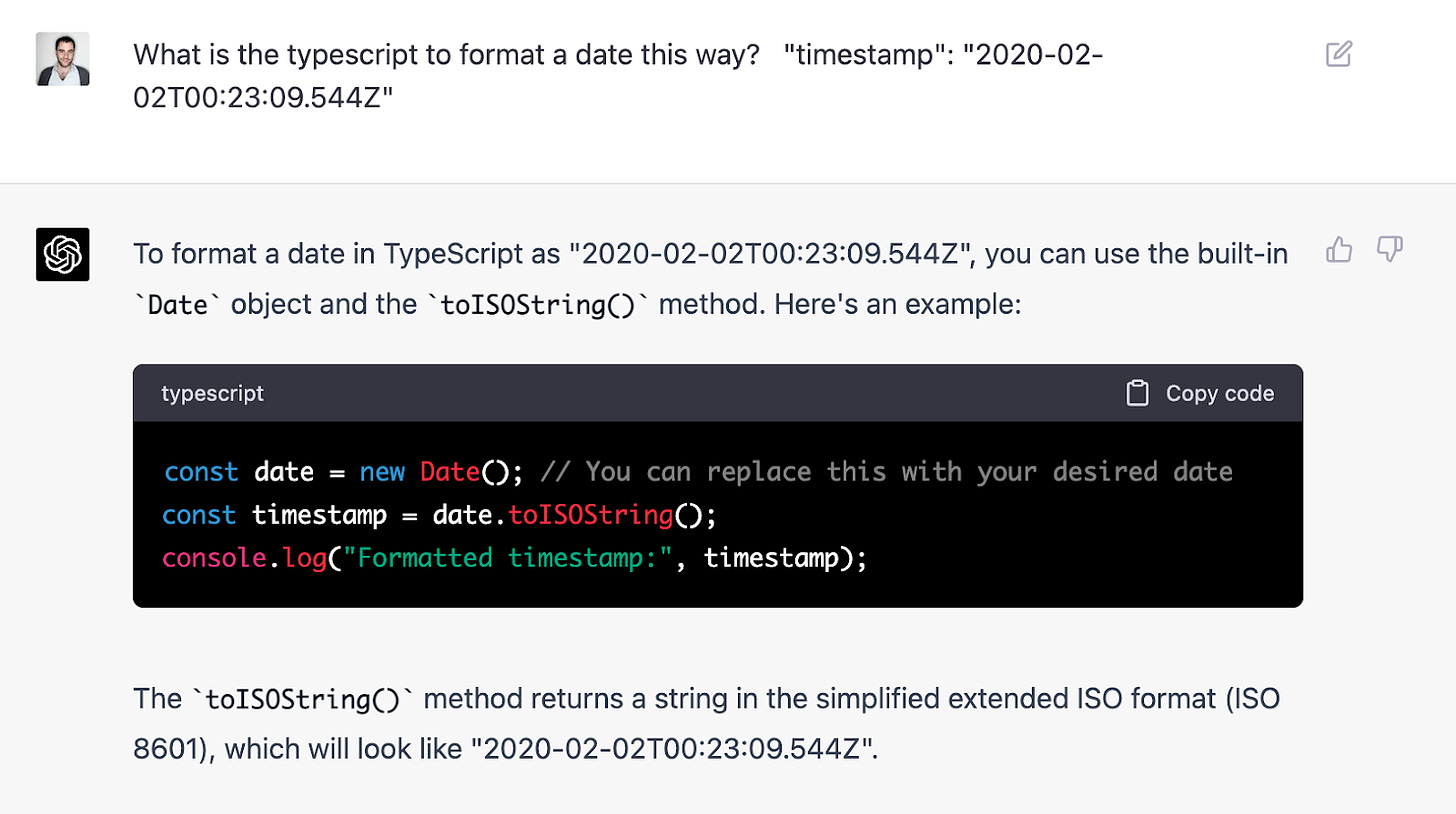

I’ve been saying for a while that I think the kinds of products this will most disrupt are sites like StackOverflow rather than Google. This new model proved that for me. I spent the day asking GPT4 small coding questions rather than looking them up on Google, and it gave the correct answer every time. Google doesn’t lose much in this arrangement, as it’s not a particularly valuable search anyway. These were things as simple as how to print a date string in a specific format (this one stumps me every time).
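As an illustration of the kind of small question in play, here is a minimal sketch of formatting a date as `YYYY-MM-DD`. The target format and the function name `formatDateUtc` are assumptions chosen for the example, not the actual question asked.

```typescript
// Format a Date as YYYY-MM-DD using its UTC fields, so the result does not
// depend on the machine's time zone.
function formatDateUtc(date: Date): string {
  const year = String(date.getUTCFullYear()).padStart(4, "0");
  const month = String(date.getUTCMonth() + 1).padStart(2, "0"); // months are 0-based
  const day = String(date.getUTCDate()).padStart(2, "0");
  return `${year}-${month}-${day}`;
}

console.log(formatDateUtc(new Date(Date.UTC(2023, 2, 17)))); // 2023-03-17
```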

Okay, enough of that. Let’s talk about some links.

[Garbage Day] Ryan Broderick on GPT4 and generative AI’s “iPhone moment.”

[Simon Willison] While this week's news mainly focused on GPT4 and Midjourney v5, possibly more interesting is all the energy around LLaMA, the Meta AI model. It’s open source-ish (you have to request access to the weights, though they’ve leaked online already). But what’s particularly fascinating is how people are finding various ways to run it locally. I agree with Simon Willison that this feels like a big shift that’s about to happen:

It feels to me like that Stable Diffusion moment back in August kick-started the entire new wave of interest in generative AI—which was then pushed into over-drive by the release of ChatGPT at the end of November.

That Stable Diffusion moment is happening again right now, for large language models—the technology behind ChatGPT itself.

This morning I ran a GPT-3 class language model on my own personal laptop for the first time!

AI stuff was weird already. It’s about to get a whole lot weirder.

[GitHub] Per the above, here’s a repo to run Alpaca locally, which is a version of LLaMA fine-tuned to act like GPT3. Someone in the BrXnd Discord has been playing with getting all these running on his MacBook, and maybe I can convince him to write something.

[Midjourney] Speaking of Midjourney v5, I had a play with generating some new X’s. My general takeaway, as with GPT4, is that it’s much better at following directions, especially regarding backgrounds. (I added the BRND afterward.)

Prompt: “a sandwich from the fast food restaurant subway in the shape of the letter X, studio photography, white background --v 5”

This one was even simpler: “the letter X in the style of Doritos --version 5”

[Twitter] The new Midjourney model can also do hands!

[The Atlantic] Charlie Warzel has a good brain dump of his current thoughts on AI. I particularly enjoyed hearing from Melanie Mitchell, who I think is one of the most cogent voices in the space.

Melanie Mitchell, a professor at the Santa Fe Institute who has been researching the field of artificial intelligence for decades, told me that this question—whether AI could ever approach something like human understanding—is a central disagreement among people who study this stuff. “Some extremely prominent people who are researchers are saying these machines maybe have the beginnings of consciousness and understanding of language, while the other extreme is that this is a bunch of blurry JPEGs and these models are merely stochastic parrots,” she said, referencing a term coined by the linguist and AI critic Emily M. Bender to describe how LLMs stitch together words based on probabilities and without any understanding. Most important, a stochastic parrot does not understand meaning. “It’s so hard to contextualize, because this is a phenomenon where the experts themselves can’t agree,” Mitchell said.

[NYTimes] Chomsky on LLMs.

I think that’s about it for this week. Get your conference tickets! It’s shaping up to be great. I also have several sponsor spots available, and I would hugely appreciate you sharing the opportunity with folks who might be interested (reply to this email or get in touch, and I’ll share the details). I’m paying for this whole thing out of my pocket and am just trying not to lose any money on this first one.

Thanks, and join us on Discord!

— Noah